LEADERS MAKE DECISIONS

…Decisions in the Computer Age

WHAT TO DO WITH BIG DATA

…and how does it affect the ethics of a leader’s decisions?

“There were 5 exabytes[1] of information created between the dawn of civilization through 2003, but that much information is now created every two days.” ~ Eric Schmidt, Executive Chairman at Google

As a DS at Officer Training School (Point Cook), I was once assessing a Key Point Commander during the “big attack”. All of the section commanders were great, and comms were excellent. The 2IC’s updates were both timely and accurate; it was almost like reading directly from the DS scenario sheet. Everyone was doing their job, and doing it well… apart from the leader. No actions were taken, no orders were given, and there were no calls for the QRF or firefighting teams. As far as decisions made by the leader went, it was an absolute zero.

There were many contributing factors, not least my lack of invisibility (“the Course Director is here assessing”). Unfamiliarity with the task certainly did not help either. In the end, though, data flow was a huge issue. There was too much data to be processed (particularly for an inexperienced commander), and it just kept coming. The OODA loop got stuck in a continuous Observe-Orient cycle and never broke out to allow Decide and Act to occur.

So the amount of data is the first part of the modern leader’s decision dilemma?

Just how much data do you need? Do you apply the 80% rule? Well… 80% of 5 exabytes is still 4 exabytes, so naturally some data must be excluded. What data? Who decides? Does the leader know who has decided and what has been excluded (and why)? If so, how could that affect their decision?

The movie “Eye in the Sky” neatly brings together the ‘Trolley Problem’ and the idea of data filtering. Consider that the data[2] on which Colonel Katherine Powell (Helen Mirren) is making her decision has already been filtered (some by analysts, and some by Artificial Intelligence) before being presented to her. The ethical dilemma of the trolley problem is therefore exacerbated both by the amount of data available and by the way in which this data has been ‘refined’.

So data filtering adds to the issue?

What issues are there with data filtering? Clearly the data must be filtered: there is just too much of it, and too much data strains or breaks the leader’s OODA loop, delaying or complicating decisions. Additionally, individuals are highly unlikely to possess the analytic skills and subject matter knowledge to perform all of this filtering themselves, let alone have the time to sift through those 4 exabytes of data to extract the pearls on which to base a solid ethical decision.

THE TROLLEY PROBLEM AND BIG DATA

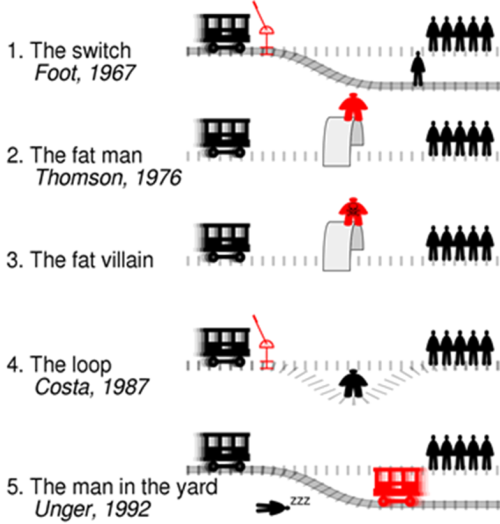

We all know the Trolley Problem and its ethical dilemma of whom to save. Do we change the path of the trolley? Do we save the one good person, or switch tracks to the five criminals? Do we push the fat man onto the tracks?

Well, big data only exacerbates these scenarios.

Just how good is the good person? You can get their life data.

Just how bad are the criminals? Are they due for release? Have they been rehabilitated? What about their families? All the data is there.

What of the fat man? Is he a villain? Does he have a genetic condition? Has he been in for a gastric sleeve? Has he just discovered a genetic trigger to burn off excess weight? Why is ‘fat’ an issue?

The ethical considerations are still the same sort; it’s just that more data equals more dilemmas.

So leaders must rely on others, and on many analysis tools, to supplement their decision-making process.

Therein lies another issue for the decision maker, another complication in the trolley problem. In Colonel Powell’s case, she was serving with a coalition operation, at the top of a decision chain: a chain which may have been affected by bias within the data analysis from any number of coalition partners. This bias may have been as simple as slightly different Rules of Engagement, or something more complex, where cultural bias affects the interpretation of the data and the identification of a legitimate target. Such biases may be unknowingly superimposed on her own conscious (and subconscious) thought processes.

When this is coupled with the time-critical requirement for near-real-time decision making, mistakes can be made, and not just in the movies…

Although every effort was made to identify the target of a Royal Australian Air Force mission in Syria, the US military-led investigation (as reported by ABC News) found that ‘unintentional human errors’ resulted in the deaths of 83 members of forces aligned with the Syrian Government.

Yet the most important piece of data was not available at the time the decision was made. Because the human in the loop had not been able to establish that the forces were friendly, they were treated as unfriendly, and therefore as a legitimate target. The human in the loop introduced an interpretation error: incorrect data to the decision maker, tragic results.[3][4]

So what about computers and AI? They will solve the issue, won’t they?

AI and machine learning techniques must be applied to that ‘4 exabyte’ Big Data Problem. The modern leader or decision maker has no realistic hope of gaining sufficient evidence on which to make an informed decision without the aid of modern computing applications. Even when supported by a large number of analysts, the analysts’ efforts will be based on data that has been filtered through multiple levels of AI decision support tools. Yet shouldn’t this give the leader a greater level of confidence in the data on which a decision is made?

A study at MIT into facial recognition, conducted by Joy Buolamwini, found:

“the three programs’ error rates in determining the gender of light-skinned men were never worse than 0.8 percent. For darker-skinned women, however, the error rates ballooned — to more than 20 percent in one case and more than 34 percent in the other two.”

This is a considerable variation from the claimed 97% accuracy rate.
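How can a system claim 97% accuracy and still misclassify a third of one group? A single headline figure pools every test image, so it is dominated by whichever group makes up most of the test set. The short Python sketch below illustrates the arithmetic using invented counts (these are NOT figures from the MIT study, and the group sizes are assumptions chosen purely for illustration): a test set heavy with lighter-skinned men produces an excellent overall number while a small group fares badly.

```python
# Purely hypothetical test-set counts, chosen for illustration only;
# these are NOT figures from the MIT study.
# Each entry maps a group to (images tested, images misclassified).
GROUPS = {
    "lighter-skinned men": (800, 6),
    "lighter-skinned women": (100, 7),
    "darker-skinned men": (70, 5),
    "darker-skinned women": (30, 10),
}


def error_rate(tested: int, misclassified: int) -> float:
    """Fraction of one group's images that were misclassified."""
    return misclassified / tested


def overall_accuracy(groups: dict[str, tuple[int, int]]) -> float:
    """Headline accuracy: pools every image, so large groups dominate."""
    tested = sum(n for n, _ in groups.values())
    wrong = sum(w for _, w in groups.values())
    return 1 - wrong / tested


if __name__ == "__main__":
    # Per-group error rates first, then the single pooled figure.
    for name, (tested, wrong) in GROUPS.items():
        print(f"{name:<22} error rate {error_rate(tested, wrong):6.1%}")
    print(f"{'overall':<22} accuracy   {overall_accuracy(GROUPS):6.1%}")
```

With these made-up numbers, the pooled accuracy works out at 97.2% (28 errors in 1,000 images), while the error rate for the 30 darker-skinned women is 10 in 30, or about 33%. Reporting the error per group, not just overall, is what exposes the disparity; a decision maker handed only the headline figure would never see it.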

“What’s really important here is the method and how that method applies to other applications,” says Joy Buolamwini, a researcher in the MIT Media Lab’s Civic Media group and first author on the new paper. “The same data-centric techniques that can be used to try to determine somebody’s gender are also used to identify a person when you’re looking for a criminal suspect or to unlock your phone. And it’s not just about computer vision. I’m really hopeful that this will spur more work into looking at [other] disparities.”

Similar results are easy to find with a quick Google search; this is not some ‘Big Brother’ conspiracy theory. The AI issue boils down to biases within the data sets used, or within the algorithms used to analyse the data collected (or, worse, both). Rather than self-learning systems correcting these flaws in the data sets, as is often assumed, multiple studies have found that biases within the data analysis algorithms can actually reinforce the data set errors.

In each case, biases within the AI data filtering provide flawed inputs to the decision maker. This flaws the ‘Orient’ step of the OODA loop, and as a consequence the ‘Decide’ and ‘Act’ steps are likewise deficient. So the ethical dilemma of ‘The Trolley Problem’ has been overwhelmed by the amount of data available and by how that data is processed.

Importantly, Amazon scrapped its AI recruitment tool when it was found that its AI data filtering was so internally biased that it specifically eliminated female applicants from middle and senior management positions. Likewise, numerous US policing agencies scrapped facial recognition systems when it was found that the predictive algorithms racially profiled suspects based on socially biased historical neighbourhood data.

The United Nations Committee on the Elimination of Racial Discrimination stated:

“…that artificial intelligence in decision-making ‘can contribute to greater effectiveness in some areas’ but found that the increasing use of facial recognition and other algorithm-driven technologies for law enforcement and immigration control risks deepening racism and xenophobia and could lead to human rights violations.”

So, how do you know that the AI tools your organisation is using are not likewise flawed?

In summary, leadership theory tells us that we must be aware of our internal biases[5] when we make our ‘Trolley Problem’ decisions. Yet in our modern computer age, it is not just our own biases that should concern us. The systems and tools that we use to simplify complex situations may also be introducing bias into our decision-making process, so this system bias needs to be accounted for: a bias that the decision maker may not be aware of, let alone understand.

…would that all decisions were only as complex as the simple ‘Trolley Problem’.
